INTRODUCTION TO “COULD OR SHOULD A ROBOT REAR A BABY?”

By Maree Foley, Co-Editor, Perspectives, Switzerland

Stellenbosch University, Cape Town, South Africa, offers an MPhil degree in Infant Mental Health (IMH). This degree is the first MPhil in IMH to be offered on the African continent. The programme is convened by Emerita Associate Professor Astrid Berg and Dr Anusha Lachman. As part of the programme, Professor Linda Richter (Witwatersrand University, Johannesburg, South Africa) invited the students to apply what they were learning about infant mental health to the rapidly developing field of artificial intelligence. The article below, “Could or should a robot rear a baby?” (Carmen Andries, Brenda Cowley, Lucky Nhlanhla, Julia Noble, Tereza Whittaker, Elvin Williams, Bea Wirz, Anusha Lachman, Astrid Berg, Linda Richter), provides a collation of ideas and reflections from the students and their teachers. These reflections relate directly to the intersection of IMH principles and practice with artificial intelligence technology, specifically technology related to the antenatal, postnatal and early childhood periods of development. Salient questions for further reflection, individually or in a group, appear at the end of this article.

Could or should a robot rear a baby? This question was posed by Linda Richter in an assignment to postgraduate students in the new Master of Philosophy in Infant Mental Health at Stellenbosch University, South Africa. What follows is a collation of our efforts to address this question. We do this by exploring the potential for robots at different steps in a caregiving role for an infant, such as robots as a womb, as caretakers, and as educators. We also explore potential emotional consequences and ethical considerations, as well as exciting possibilities of artificial intelligence (AI)-assisted caregiving models.

Introduction

We live in an era of rapidly developing artificial intelligence (AI) that is revolutionizing the world and our interaction within it and with each other. Some see AI as posing serious threats, as illustrated by the open letter submitted in August 2017 by Elon Musk and other CEOs of technology companies to the United Nations, urging a ban on AI in weapons before the technology gets out of hand. On the other hand, no one is untouched by Judith Newman’s book To Siri with Love, which describes how the automated assistant helped her son communicate and be polite, and how it became his friend. Judith Newman notes in a review that “Siri’s responses are not entirely predictable, but they are predictably kind” (“To Siri, With Love: A mother, her autistic son, and the kindness of a machine – Judith Newman – Google Books,” n.d.).

Robots like Hello Kitty, companies testing robots to monitor children in nurseries in Japan, and the pervasiveness of digital devices in the interface between parents and children, prompt questions about the technological and economic benefits, and pitfalls of digital reliance and robotic assistance, but more importantly the potential impact on nurturing care as a fundamental need of human beings.

In what follows, we reflect on the concept of nurturing care and then consider what robots could and could not provide for children and families. We then raise some ethical issues that need to be considered as AI applications progress and become commercialized.

Nurturing care

Adult potential to provide, and infant dependence on, nurturing care is a feature of our human evolution and is the basis of human emotional, physical, and cognitive development. Nurturing care comprises the environment and caregiving responses that maintain and promote the health, nutrition, safety and security, responsive caregiving and opportunities to learn that facilitate a child’s attempt to connect to and learn about their world (Black et al., 2017).

Donald Winnicott (1964) stated that “The proper care of an infant can only be done from the heart; perhaps I should say that the head cannot do it alone…” (p. 105). But what do “proper care”, the “heart” and the “head” really mean?

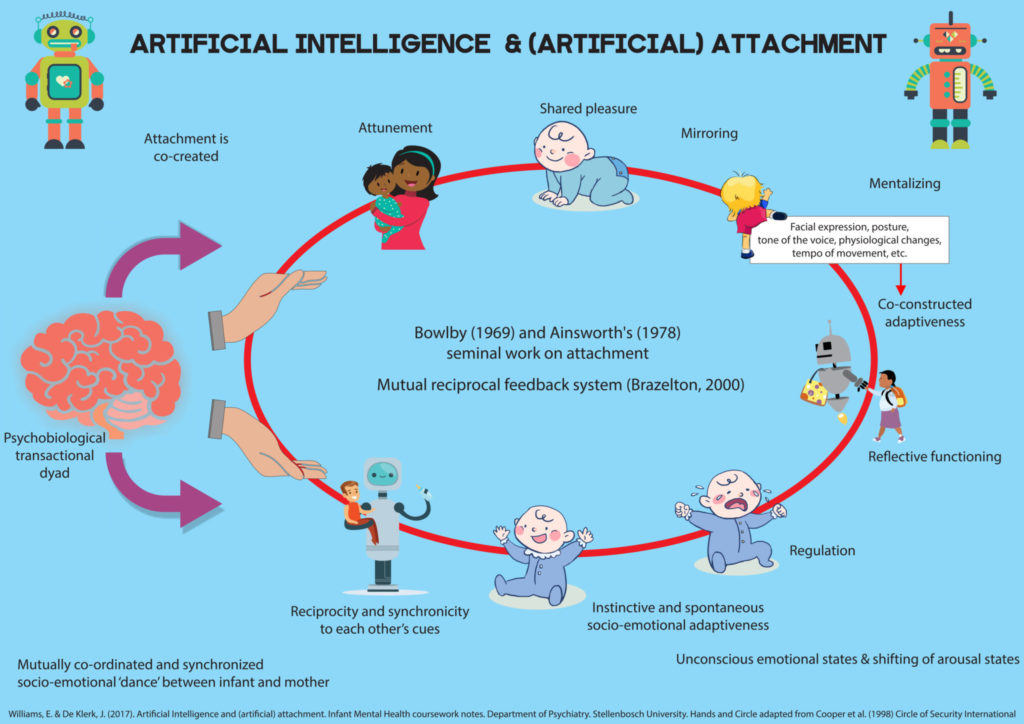

It is now recognized that “proper care” of an infant needs to be sensitive to the physical, cognitive, and emotional needs of a child. With respect to the head and the heart, the interactions between baby and intimate caregiver are a complex blend of physical sensations – touch, smell, sound, sight, and taste, which Deborah Rosenblatt described as a “reciprocal multisensory exchange” – as well as the caregiver’s responsiveness to cues and the baby’s high sensitivity to contingency.

Mary Ainsworth and others have described qualities such as parental sensitivity and responsiveness as “the mother’s ability to perceive and to interpret accurately the signals and communications implicit in her infant’s behaviour, and given this understanding, to respond to them appropriately and promptly” (Ainsworth, Blehar, Waters, & Wall, 1978).

This sensitivity and responsivity is developed and fine-tuned over time by shared experiences and increasing knowledge of each other. For example, a mother may respond differently to a child crying because she has dropped her toy than to a child who is crying because she has been hurt. A child’s over-reaction to a minor event may alert a caring parent to the possibility that something else is amiss with their child. The cause may be only tangentially related to the present circumstances. A caring and engaged adult is able to use emotional resonance, creative and reflective thought to piece together possibilities of the cause of a child’s distress and respond empathically.

Daniel Stern (1985) speaks about emotional attunement, in which the mother communicates to her baby that she is receptive to his or her feelings. According to Stern (1995), these maternal behaviours emanate from the “motherhood constellation”, the mother’s instinctual focus on and devotion to her infant, which he and others before him, such as Winnicott, consider to be critical to a child’s development.

Fonagy’s concept of mentalisation, or “mind mindedness”, allows predictions of what an infant may be thinking or intending by his or her actions, expressions, and body language. When a mother imitates or reflects her baby’s emotional state in her facial expression, it helps the baby to form a representation of his or her own emotions. Similarly, on encountering the unfamiliar, infants look to their mothers for clues about how to behave, a response termed “social referencing” (Feinman, 1982). A mother provides clues about the dangers or safety of situations or people, particularly by means of her facial expressions and bodily gestures.

This social biofeedback leads to the development of a second order symbolic representation of the infant’s own emotional state (Fonagy, Gergely, & Target, 2007) and facilitates the development of the child’s ability to empathise, and understand the emotions and intentions of others.

One of the key functions of a caregiver is to provide appropriate regulation for the infant’s emotions, as the “regulation of affect is central to the adaptive function of the brain and the organizing principle of human development” (Schore, 2001, p. 9). This coping capacity in human infancy is mediated by the maturation of brain systems and influenced significantly by the infant’s early interaction with the caregiver. It is suggested that even subtle variations or changes in maternal behaviours can affect stress reactivity in the developing infant.

The development of speech and language depends on the attuned input that the child receives from the caregiver. Language interactions between very young children and adults are transactional in nature, responding to each other and changing over time. Caring adults continuously assess their child’s comprehension abilities through both language and non-verbal cues and change their behaviour to match and encourage development, extending the infant’s range where appropriate through scaffolding.

What could robots provide?

A robot may be able to be responsive and consistent, but could a machine be programmed to support the complexities of a constantly evolving reciprocal relationship of care and trust? We explore this by thinking about the potential for robots at different steps in a caregiving role for an infant.

A robot womb?

One day it might be technically possible to grow a baby without a human womb. Three stages in the development of a human embryo and foetus have been described for such an automated process. The first is in vitro fertilisation (IVF), which is already routinely carried out in a lab. Fully automating the IVF process is plausible in the near future. Using already fertilised eggs, scientists have shown that embryos can be grown in the lab for two weeks after fertilisation.

The second stage is that of early gestation, prior to around 22 to 24 weeks, when a foetus does not have viable lung function. During this time, the embryo would need to be housed in an artificial uterus. Perhaps unsurprisingly, there has been a great deal of research into the development of artificial wombs; a field of science known as ectogenesis. In a 2011 paper, Dr Carlo Bulletti and colleagues re-evaluated the chances of a laboratory uterus that would supply nutrients and oxygen to an incubated foetus and would be capable of disposing of waste materials. They concluded: “[…] the growth and development of fetuses between 14 and 35 weeks of pregnancy… is within reach given our current knowledge and existing technical tools” (Bulletti et al., 2011, p. 127).

The final phase of foetal development can already be managed outside a mother’s womb. If a baby is born after 26 weeks in a modern hospital, it has a very good chance of survival with incubation and support.

In turn, machine pregnancies could be helpful for mothers who are sick, receiving invasive treatment, or who are addicts and want a drug-free baby. However, the research on babies in utero and their relationship to the mother’s voice and heartbeat, and to the voices of other close family members such as the father and siblings, tells us that babies in utero are already actively engaged in creating the building blocks for relationships. Similarly, the mother and/or the parent dyad are engaged in a process of getting to know the baby as a person. So, while a robot-assisted pregnancy is plausible, might it always need a human person with whom the baby in utero can form an interpersonal relationship? As most parents discover, birthing a baby is only the beginning! The next 18-plus years of nurturing care develop intelligence, language, personality, and humanity.

Robots as educational and caretaking tools?

Robots can be important educational tools for children and may even stand in as caregivers. For example, Sharkey and Sharkey (2010) list some of the positive responses of parents when they were interviewed regarding the Hello Kitty Robot. These included:

- “Since we have invited Hello Kitty (Kikki – as my son calls her), life has been so much easier for everyone. My daughter is no longer the built-in babysitter for my son. Hello Kitty does all the work. I always set Kikki to parent mode, and she does a great job. My two year old is already learning words in Japanese, German, and French.”

- “As a single executive mom, I spend most of my home time on the computer and phone and so don’t have a lot of chance to interact with my 18-month-old. The Hello Kitty robot does a great job of talking to her and keeping her occupied for hours on end. Last night I came into the playroom around 1am to find her, still dressed, and curled up sound asleep round big plastic Kitty Robo. How cute! (And how nice not to hear those heart-breaking lonely cries while I’m trying to get some work done).”

- “Robo Kitty is like another parent at our house. She talks so kindly to my little boy. He’s even starting to speak with her accent! It’s so cute. Robo Kitty puts Max to sleep, watches TV with him, watches him in the bath, listens to him read. It’s amazing, like a best friend or as Max says ‘Kitty Mommy!’” (pp. 2-3)

It is evident that, in these cases, the Hello Kitty robot is doing a number of things right, at least in part by relieving tired and stressed parents who have little other support and by providing attention and companionship to lonely children.

Another example is iPal, a 90-cm high child-sized babysitter nanny robot [with big eyes and a tablet attached] designed to take on adult responsibilities (“This Robot Takes Care of Your Children,” n.d.). The robotic babysitter is able to keep 3- to 8-year-old children entertained for “a couple of hours” without adult supervision. It is able to communicate using natural language and, according to its founder Jiping Wang, “is not a cold, unfeeling machine, but a great companion for your child. iPal’s emotion management system senses and responds to happiness, depression and loneliness: iPal is happy when your child is happy, and encourages your child when he is sad” (ibid.).

Can robots be sensitive, responsive and in tune with a baby?

From the robot’s side, can the process of relationship development be programmed? In humans, it is a complex physical and emotional process that depends in part on the release of oxytocin and vasopressin hormones, physical closeness and emotional intimacy. This natural, organic process would have to be re-constructed through ongoing machine learning algorithms that adapted continuously to the baby’s initiatives and responses. Based on current robotic learning with respect to facial expressions, this might be technically possible, even sooner than we think. But would it work to sculpt the mental and emotional life of a young human being?

The potential of AI to enhance human emotional learning is highlighted by Judith Newman in the aforementioned book, which tells the story of how the electronic personal assistant “Siri” helped foster the communication skills of her autistic adolescent son, Gus. A child with autism who finds it hard to read emotions and recognize emotional reciprocity, and who struggles with unpredictability, may find it easier to converse with an inanimate, programmed assistant like Siri, which gives accurate, predictable and tireless attention. In the case of Gus, this ultimately translated into an ability to have more conversations with other human beings.

In the future, AI is likely to get to a point where a robot may be able to simulate sensitive and responsive caregiving and may in fact do it quite well. For example, the micro-responses between mother and infant in serve-and-return interactions, documented in Beebe’s second-by-second research (2010), could possibly be learnt by a robot (Jaffe, Beebe, Feldstein, Crown, & Jasnow, 2001). Other skills such as contingent mirroring and non-contingent responsiveness may also be able to be programmed. Robots could also be programmed to not “get it right” 100 percent of the time, to avoid the dangers and deprivations of perfect mothering (Hopkins, 1996). Perhaps 70 percent would be optimal? The robotic caregiver who is not plagued by ghosts in the nursery (Fraiberg, 1987) or overwhelmed by trauma or stressful environmental circumstances could possibly provide the consistency and containment so necessary in the life of the infant.

The pace of developments is amazing. For example, scientists in Russia claim that they are on the verge of creating an emotional computer which could think like a person, and build up trust, and bond with humans. It will be used to play the role of a person, will understand the context of conversations, keep up with events and set its own goals. The system’s name, ‘Virtual Actor’ was chosen because one of the main functions it will serve will be as an actor, playing the role of a specific person [Prof Samsonovitch, National Research Nuclear University in Moscow]. (“Russian Researchers Launch ‘Virtual Actor’ in 18 Months,” n.d.)

Therefore, it is not too far-fetched to argue that one day being reared by a robot will not only be possible, but for some children may not be such a bad thing. Perhaps interacting with a robot with a highly sophisticated artificial intelligence may be better than being raised by parents who are neglectful and abusive. Perhaps in situations such as large group orphanages, human infants might do better with the companionship of a robot carer than with little human caregiving at all. Robots would at best be insensitive carers unable to respond with sufficient attention to the finer needs of individual children, but able to be consistent providers of day-to-day care.

This rapidly expanding technology, boosted by the motivating forces of efficiency, multitasking and profit, poses immense challenges for our infant mental health field. It is to some of these challenges that we now turn our attention.

What can a robot not be?

A robot’s ability to read emotions, interpret them, and respond appropriately – its reflective function – would need to be extremely sophisticated to be able to provide the “real-time” emotional responses necessary for successful emotional communication and affect regulation, both necessary for a young child’s healthy development. To enable “flexible, goal-directed caregiving” a robot would need to master empathy and mentalising functions, as well as affect regulation (Feldman, 2015).

If a robot could learn to differentiate the infinite variations of a smile, for example, would it also be able to display them in a way that could be read by a living human being? As robots become more and more humanoid, the adult perceiver’s usually positive and empathic response to them may switch to revulsion, a process which Mori described as the “uncanny valley”. The more like a human an entity is, the less affinity there is for it, because of an increased sense of “eeriness” (Mori, 1970). We could hypothesize that somewhere in their unconscious minds, babies will be aware that there is no genuine human heart beating under that well-constructed robotic exterior. When robots do not provide a coherent sensory ‘Gestalt’, the consequence could be an insecure attachment.

Ethical considerations

In their article “The crying shame of robot nannies”, Sharkey and Sharkey (2010) explicitly articulate ethical issues, psychological challenges and potential outcomes for society and especially for children.

While looking at the potential benefits of robots, we need to be conscious of the fact that no matter how good a machine is, it will never be fully able to override the intrinsic features of human relationships. Robots might be able to classify emotions and respond with matching expressions, but rearing a child requires sensitivity to cultural embeddedness, the personality of the child, and other factors. Melson (2010) investigated negative consequences of technology replacing human interaction and reported that if children begin to personify robots as living creatures, they are susceptible to developing robotic understandings of humans, bereft of moral standing.

Advancement in technology is bringing AI into our personal lives and creating an illusion that robots are able to understand human behaviour and respond emotionally to us. The risk, especially for children, is that this could lead to misplaced trust in robots. Research has shown that children tend to see robots as alive, and feel an emotional as well as intellectual connection with them (Turkle, Breazeal, Dasté, & Scassellati, 2006). The question arises: are the children being deceived?

Alan Winfield, an expert in robot ethics, argues “that robots should never be designed to deceive… their machine nature should be transparent. We’re concerned about vulnerable people – they might be children, disabled people, elderly people… – coming to believe that the robot cares for them” (“Would you want a robot to be your child’s best friend? | Technology | The Guardian,” n.d.). Indeed, that may be the crux of the matter, as infants and young children will not be able to grasp that the robot nanny is not ‘real’ and they will be the ones most in need of protection against deception.

There are also questions of who bears responsibility for what the robot does and for any harm it may inflict. Who will have access to its hard drive where information is stored? Will the child in later life have the right to destroy recordings? These questions need to be thought about now, rather than when it may be too late. And what about the robot – do we have an ethical obligation to what we have created? “…as robots begin to gain a semblance of emotions, as they begin to behave like human beings, and learn and adopt our cultural and social values, perhaps the old stories need revisiting. At the very least we have a moral obligation to figure out what to teach our machines about the best way in which to live in the world. Once we’ve done that, we may well feel compelled to reconsider how we treat them.” (Simon Parkin, writer & journalist; author of “Death by Video Games”) (“Teaching robots right from wrong | 1843,” n.d.).

Conclusion

Whilst much of the science of the “heart” and the “head” in parenting is as yet undiscovered, some of the biological and psychological bases of love seen in the behaviour of humans raising baby humans have been described. We know that parenting has evolved through the long adaptation of mammals and primates to enable infant development to be uniquely fit for human culture through the unique human bond that forms between a baby and their adult caregivers. As well as being central to the healthy development of the individual child, these same emotional bonds are the root from which the complex social systems necessary for human survival stem.

Although it does seem possible that robots could one day create new humans and raise them into adulthood, would such humans see their robot parents as a mother and father in the traditional sense we know today? Rather than perfecting robots, should we not rather put our resources into supporting parents in their important roles, including with the help of AI innovation?

This salient and thought-inciting article lends itself to the possibility of being utilised as a springboard for small group discussions, for example, in a classroom, or among WAIMH affiliate groups. As such, the following reflective questions are offered that could be used to help structure the beginnings of a group-based discussion.

- What IMH principles do you think are necessary to highlight as artificial intelligence technology expands into the antenatal, post-natal and early childhood areas of development?

- James McHale defines coparenting as “an enterprise undertaken by two or more adults who together take on the care and upbringing of children for whom they share responsibility.” Coparents may include members of the child’s extended family, foster parents and/or other specialized caregivers. What can we learn from the theory and practice of coparenting that might be applied to the way this new technology is used with the infant in their coparenting context?

- IMH is an intergenerational field. What might it mean for infants raised increasingly by artificial intelligence technology when they become parents and/or part of a coparenting team?

REFERENCES

Ainsworth, M. S., Blehar, M. C., Waters, E., & Wall, S. (1978). Patterns of attachment: A psychological study of the strange situation. Oxford UK: Erlbaum.

Black, M. M., Walker, S. P., Fernald, L. C. H., Andersen, C. T., DiGirolamo, A. M., Lu, C., & Grantham-McGregor, S. (2017). Early childhood development coming of age: science through the life course. The Lancet, 389(10064), 77–90. http://doi.org/10.1016/S0140-6736(16)31389-7

Bulletti, C., Palagiano, A., Pace, C., Cerni, A., Borini, A., & de Ziegler, D. (2011). The artificial womb. Annals of the New York Academy of Sciences, 1221(1), 124–128. http://doi.org/10.1111/j.1749-6632.2011.05999.x

Feinman, S. (1982). Social Referencing in Infancy. Merrill-Palmer Quarterly, 28(4), 445–470.

Feldman, R. (2015). The neurobiology of mammalian parenting and the biosocial context of human caregiving. Hormones and Behavior. http://doi.org/10.1016/j.yhbeh.2015.10.001

Fonagy, P., Gergely, G., & Target, M. (2007). The parent-infant dyad and the construction of the subjective self. Journal of Child Psychology and Psychiatry, and Allied Disciplines, 48(3–4), 288–328. http://doi.org/10.1111/j.1469-7610.2007.01727.x

Fraiberg, S. (1987). Selected Writings of Selma Fraiberg. (L. Fraiberg, Ed.). Columbus: Ohio State University Press.

Hopkins, J. (1996). The dangers and deprivations of too-good mothering. Journal of Child Psychotherapy, 22(3), 407–422.

Jaffe, J., Beebe, B., Feldstein, S., Crown, C. L., & Jasnow, M. D. (2001). Rhythms of dialogue in infancy: coordinated timing in development. Monographs of the Society for Research in Child Development, 66(2), i–viii, 1-132.

Melson, G. F. (2010). Child development robots: Social forces, children’s perspectives. Interaction Studies, 11(2), 227–232. http://doi.org/10.1075/is.11.2.08mel

Newman, J. (2017). To Siri with Love. HarperCollins.

Russian Researchers Launch “Virtual Actor” in 18 Months. (n.d.). Retrieved November 9, 2017, from http://www.chinadaily.com.cn/beijing/2016-10/27/content_27335325.htm

Schore, A. N. (2001). Effects of a secure attachment relationship on right brain development, affect regulation, and infant mental health. Infant Mental Health Journal, 22(1–2), 7–66. http://doi.org/10.1002/1097-0355(200101/04)22:1<7::AID-IMHJ2>3.0.CO;2-N

Sharkey, N., & Sharkey, A. (2010). The crying shame of robot nannies: An ethical appraisal. Interaction Studies, 11(2), 161–190. http://doi.org/10.1075/is.11.2.01sha

Stern, D. (1985). The interpersonal world of the infant. New York: Basic Books.

Stern, D. (1995). The Motherhood Constellation. New York: Basic Books.

Teaching robots right from wrong | 1843. (n.d.). Retrieved January 10, 2018, from https://www.1843magazine.com/features/teaching-robots-right-from-wrong

This Robot Takes Care of Your Children. (n.d.). Retrieved November 9, 2017, from https://www.nextnature.net/2016/10/robot-nanny-adult-responsibilities/

To Siri, With Love: A mother, her autistic son, and the kindness of a machine – Judith Newman – Google Books. (n.d.). Retrieved December 15, 2017, from https://books.google.co.za/books?id=GMycCwAAQBAJ&pg=PT99&dq=Siri’s+responses+are+not+entirely+predictable,+but+they+are+predictably+kind”.&hl=en&sa=X&ved=0ahUKEwjmivWfu4vYAhWJOxoKHUsTDIIQuwUIKzAA#v=onepage&q=Siri’s responses ar

Turkle, S., Breazeal, C., Dasté, O., & Scassellati, B. (2006). Encounters with kismet and cog: Children respond to relational artifacts. … Media: Transformations in …, (September), 1–20. Retrieved from http://web.mit.edu/~sturkle/www/encounterswithkismet.pdf

Mori, M. (1970). The uncanny valley (K. F. MacDorman & T. Minato, Trans.). Energy, 7(4), 33–35.

Winnicott, D. W. (1964). The Child, the Family, and the Outside World. New York: Penguin Books.

Would you want a robot to be your child’s best friend? | Technology | The Guardian. (n.d.). Retrieved November 13, 2017, from https://www.theguardian.com/technology/2017/sep/10/should-robot-be-your-childs-best-friend?CMP=share_btn_link

Authors

Carmen Andries, Brenda Cowley, Lucky Nhlanhla, Julia Noble, Tereza Whittaker, Elvin Williams, Bea Wirz, Anusha Lachman, Astrid Berg, Linda Richter

Stellenbosch University, Western Cape, South Africa